The MNIST dataset

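sklearn can download popular datasets for you. On Windows the data is cached in the C:\Users\<username>\scikit_learn_data folder; if you would rather not spell out the user name, you can reach the same folder directly via %HOMEPATH%\scikit_learn_data.

from sklearn.datasets import fetch_openml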

mnist = fetch_openml('mnist_784', version=1)

mnist.keys()

# dict_keys(['data', 'target', 'feature_names', 'DESCR', 'details', 'categories', 'url'])
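The later snippets use `X_train`, `y_train`, and `y_train_5` without defining them. A minimal sketch of how they are commonly built, assuming the conventional MNIST layout (the first 60,000 rows for training, the rest for testing) and that the labels arrive as strings. The helper name `split_and_binarize` and the toy data are hypothetical and only stand in for `mnist["data"]` and `mnist["target"]`:

```python
def split_and_binarize(data, target, n_train, positive_digit=5):
    """Split rows into train/test and build a binary 'is it a 5?' target."""
    # The MNIST labels from fetch_openml are strings, so cast them to int first.
    labels = [int(t) for t in target]
    X_train, X_test = data[:n_train], data[n_train:]
    y_train, y_test = labels[:n_train], labels[n_train:]
    y_train_5 = [y == positive_digit for y in y_train]
    y_test_5 = [y == positive_digit for y in y_test]
    return X_train, X_test, y_train, y_test, y_train_5, y_test_5


# Toy stand-in for the real data: 6 "images" of 2 pixels each (hypothetical).
toy_data = [[0, 1], [2, 3], [4, 5], [6, 7], [8, 9], [10, 11]]
toy_target = ["5", "0", "4", "5", "9", "5"]

X_train, X_test, y_train, y_test, y_train_5, y_test_5 = split_and_binarize(
    toy_data, toy_target, n_train=4
)
print(y_train_5)  # [True, False, False, True]
```

With the real dataset the same idea would use `n_train=60000`.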

Binary classifier

SGDClassifier is a linear classifier whose parameters are trained with stochastic gradient descent.

from sklearn.linear_model import SGDClassifier

sgd_clf = SGDClassifier(max_iter=1000, tol=1e-3, random_state=42)

sgd_clf.fit(X_train, y_train_5)

# Random (baseline) classifier

from sklearn.dummy import DummyClassifier
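Only the import is shown for the baseline, so here is a plain-Python sketch of one possible baseline strategy: always predict the most frequent class. The toy label list (10% positives, roughly the share of 5s in MNIST) and the function name `majority_baseline_accuracy` are assumptions for illustration, not part of sklearn:

```python
from collections import Counter


def majority_baseline_accuracy(y_true):
    """Accuracy of a classifier that always predicts the most frequent label."""
    most_common_label, count = Counter(y_true).most_common(1)[0]
    return most_common_label, count / len(y_true)


# Hypothetical labels: 1 positive ("is a 5") in every 10 samples.
y_toy = ([True] + [False] * 9) * 100
label, accuracy = majority_baseline_accuracy(y_toy)
print(label, accuracy)  # False 0.9
```

A model that learns nothing already reaches 90% accuracy here, which is why accuracy alone says little on a skewed dataset.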

Performance evaluation

Cross-validation

from sklearn.model_selection import cross_val_score

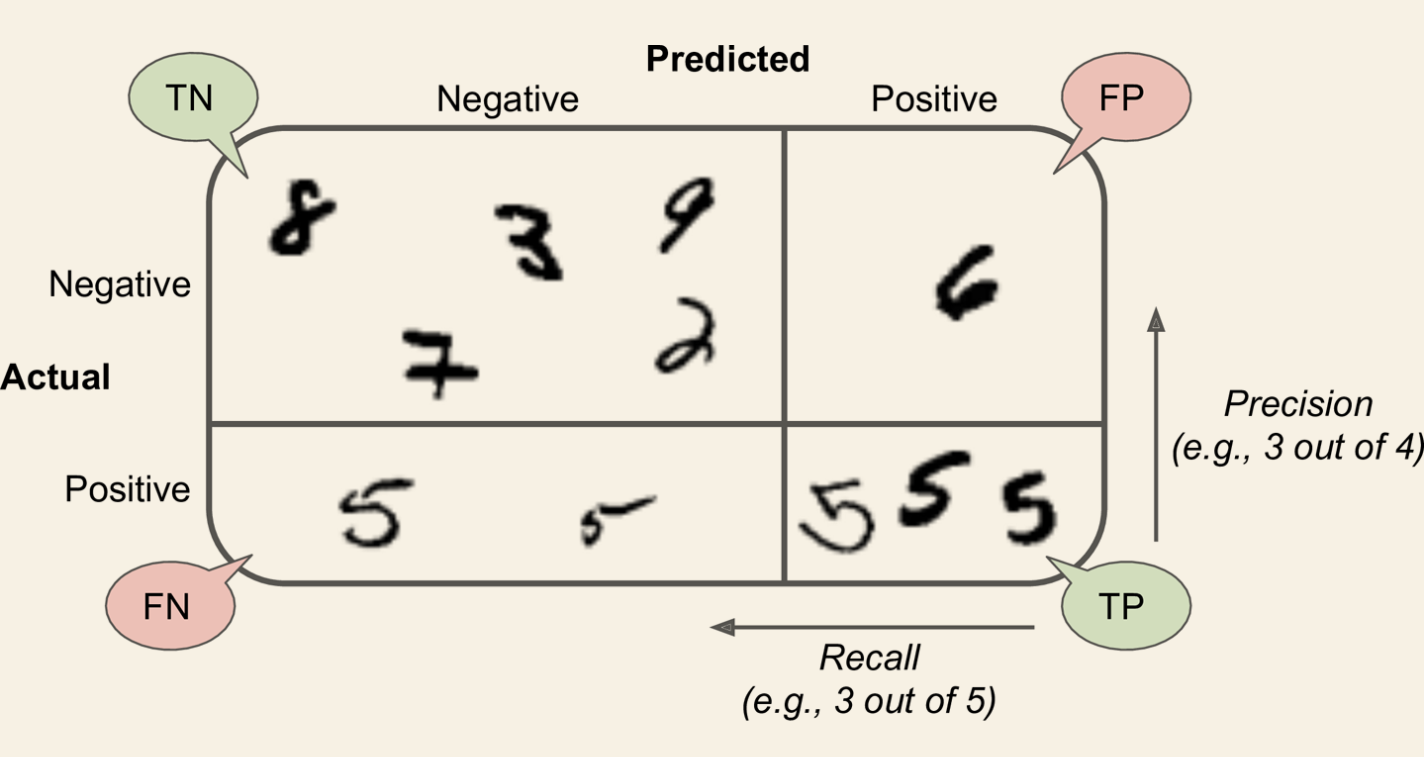
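To make the idea behind k-fold cross-validation concrete, here is a small plain-Python sketch of how the sample indices can be partitioned. It is a simplification: for classifiers, `cross_val_score` with an integer `cv` uses stratified folds, which this sketch does not attempt. The function name `k_fold_indices` is hypothetical:

```python
def k_fold_indices(n_samples, n_folds):
    """Yield (train_indices, validation_indices) for each of n_folds folds."""
    indices = list(range(n_samples))
    # Spread any remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [
        n_samples // n_folds + (1 if i < n_samples % n_folds else 0)
        for i in range(n_folds)
    ]
    start = 0
    for size in fold_sizes:
        validation = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, validation
        start += size


folds = list(k_fold_indices(n_samples=7, n_folds=3))
for train, validation in folds:
    print("train:", train, "validation:", validation)
```

Each sample lands in the validation set exactly once, and the model is retrained from scratch on the remaining samples for every fold.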

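Confusion matrix

"Negative" and "Positive" always refer to the predicted class, while "True" and "False" say whether that prediction was correct. The entries on the main diagonal are the correct predictions.

from sklearn.model_selection import cross_val_predict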
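As an illustration of what the four cells count, here is a plain-Python sketch for the binary case. It uses the same layout as sklearn's `confusion_matrix` (rows are actual classes, columns are predicted classes, negative class first); the function name and the toy labels are hypothetical:

```python
def binary_confusion_matrix(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]]: rows are actual classes, columns predicted."""
    tn = fp = fn = tp = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted and actual:
            tp += 1  # predicted positive, and that was right
        elif predicted and not actual:
            fp += 1  # predicted positive, and that was wrong
        elif not predicted and actual:
            fn += 1  # predicted negative, and that was wrong
        else:
            tn += 1  # predicted negative, and that was right
    return [[tn, fp], [fn, tp]]


y_true = [True, True, True, False, False, False, False, False]
y_pred = [True, True, False, True, False, False, False, False]
matrix = binary_confusion_matrix(y_true, y_pred)
print(matrix)  # [[4, 1], [1, 2]]
```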

Precision and recall

from sklearn.metrics import precision_score, recall_score

The $F_1$ score is the harmonic mean of precision and recall:

$$
F_1 = \frac{2}{\frac{1}{\text{Precision}}+\frac{1}{\text{Recall}}} = \frac{2TP}{2TP+FN+FP}
$$
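A short worked example, with made-up counts, checking that the two forms of the $F_1$ formula agree. The function name `precision_recall_f1` and the numbers are illustrative assumptions:

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 (both forms) from raw counts."""
    precision = tp / (tp + fp)  # share of positive predictions that are right
    recall = tp / (tp + fn)     # share of actual positives that are found
    f1_harmonic = 2 / (1 / precision + 1 / recall)
    f1_counts = 2 * tp / (2 * tp + fn + fp)
    return precision, recall, f1_harmonic, f1_counts


precision, recall, f1_harmonic, f1_counts = precision_recall_f1(tp=30, fp=10, fn=20)
print(precision, recall)    # 0.75 0.6
print(round(f1_counts, 4))  # 0.6667
```

Because it is a harmonic mean, $F_1$ is only high when precision and recall are both high.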

Precision/recall trade-off (PR curve)

y_scores = cross_val_predict(sgd_clf, X_train, y_train_5, cv=3, method="decision_function")
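Once decision scores are available, the trade-off comes from where the threshold is placed. The sketch below, using made-up scores and labels, shows precision rising and recall falling as the threshold increases. The function name `precision_recall_at` is hypothetical, and returning precision 1.0 when nothing is predicted positive is a convention chosen here:

```python
def precision_recall_at(y_true, scores, threshold):
    """Precision and recall when predicting positive for score > threshold."""
    y_pred = [s > threshold for s in scores]
    tp = sum(p and t for p, t in zip(y_pred, y_true))
    fp = sum(p and not t for p, t in zip(y_pred, y_true))
    fn = sum((not p) and t for p, t in zip(y_pred, y_true))
    # Convention for this sketch: precision is 1.0 if nothing is predicted positive.
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn)
    return precision, recall


# Hypothetical decision scores and true labels.
y_true = [False, False, True, False, True, True]
scores = [-3.0, -1.0, -0.5, 0.5, 1.0, 2.5]

for threshold in (-2.0, 0.0, 0.75):
    print(threshold, precision_recall_at(y_true, scores, threshold))
```

With these toy values, precision goes from 0.6 to about 0.67 to 1.0, while recall goes from 1.0 to about 0.67.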

ROC curve

The ROC curve plots the true positive rate (sensitivity, TPR, recall) against the false positive rate (FPR, 1 - specificity).

from sklearn.metrics import roc_curve

fpr, tpr, thresholds = roc_curve(y_train_5, y_scores)

# ROC AUC

from sklearn.metrics import roc_auc_score

roc_auc_score(y_train_5, y_scores)
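To show what the curve and its area measure, here is a plain-Python sketch that builds the (FPR, TPR) points by lowering the threshold one sample at a time and integrates them with the trapezoidal rule. It assumes there are no tied scores; the function names and toy data are hypothetical and this is not how sklearn implements it:

```python
def roc_points(y_true, scores):
    """Return (FPR, TPR) pairs obtained by lowering the threshold step by step."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    # Visit samples from highest to lowest score; each one becomes "positive".
    ranked = sorted(zip(scores, y_true), reverse=True)
    points = [(0.0, 0.0)]
    tp = fp = 0
    for _, is_positive in ranked:
        if is_positive:
            tp += 1
        else:
            fp += 1
        points.append((fp / n_neg, tp / n_pos))
    return points


def area_under_curve(points):
    """Area under a piecewise-linear curve via the trapezoidal rule."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )


# Hypothetical decision scores and true labels (no ties).
y_true = [False, False, True, False, True, True]
scores = [-3.0, -1.0, -0.5, 0.5, 1.0, 2.5]

points = roc_points(y_true, scores)
roc_auc = area_under_curve(points)
print(round(roc_auc, 4))  # 0.8889
```

The area equals the fraction of (positive, negative) pairs in which the positive sample gets the higher score: 8 of 9 pairs here.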

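Multiclass classifier

A multiclass classifier can be built from binary classifiers using either OVR (one-versus-the-rest, also called one-versus-all, OVA) or OVO (one-versus-one). sklearn picks OVR or OVO automatically depending on the algorithm: OVR in most cases, OVO for SVMs. You can also force a particular strategy.

from sklearn.multiclass import OneVsOneClassifier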

ovo_clf = OneVsOneClassifier(sgd_clf)

ovo_clf.fit(X_train, y_train)

len(ovo_clf.estimators_) # 45
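The 45 estimators follow from simple counting: OVO trains one binary classifier per unordered pair of classes, N(N-1)/2 in total, whereas OVR trains one per class. A small sketch of that arithmetic for the 10 digit classes:

```python
from itertools import combinations

digit_classes = range(10)

# OVO: one binary classifier for every unordered pair of classes.
class_pairs = list(combinations(digit_classes, 2))
n_ovo = len(class_pairs)

# OVR: one binary classifier per class.
n_ovr = len(digit_classes)

print(n_ovo, n_ovr)     # 45 10
print(class_pairs[:3])  # [(0, 1), (0, 2), (0, 3)]
```

OVO trains more classifiers, but each one only sees the training samples of its two classes.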

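Some classifiers accept multiclass targets directly, so no explicit OVR or OVO wrapper is needed. SGDClassifier is one of them (internally it still trains one binary classifier per class, in a one-versus-all scheme).

Error analysis

Use the confusion matrix to find which kinds of errors the classifier makes, then inspect individual misclassified instances.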

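Multilabel classification

The output is several labels per instance rather than a single one. KNN supports multilabel classification.

import numpy as np

from sklearn.neighbors import KNeighborsClassifier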

y_train_large = (y_train >= 7)

y_train_odd = (y_train % 2 == 1)

y_multilabel = np.c_[y_train_large, y_train_odd]

knn_clf = KNeighborsClassifier()

knn_clf.fit(X_train, y_multilabel)

knn_clf.predict([some_digit]) # array([[False, True]])
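The two target columns are simply two yes/no questions asked about every digit. A plain-Python sketch of the same labelling rule, assuming the digit is already an integer (string labels would have to be cast first, since a comparison such as `>= 7` needs numbers); the function name `multilabel_target` is hypothetical:

```python
def multilabel_target(digit):
    """Return [is_large, is_odd] for one digit, mirroring the two label columns."""
    is_large = digit >= 7
    is_odd = digit % 2 == 1
    return [is_large, is_odd]


for digit in (5, 8, 9):
    print(digit, multilabel_target(digit))
# 5 [False, True]
# 8 [True, False]
# 9 [True, True]
```

The output for 5 matches the `[False, True]` prediction shown in the comment, which is what a correct prediction would look like if `some_digit` is a 5.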

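Multioutput classification

A generalization of multilabel classification in which each label can be multiclass, that is, take more than two possible values.

# Image denoising example: the output is the pixel vector of the clean image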

noise = np.random.randint(0, 100, (len(X_train), 784))

X_train_mod = X_train + noise

noise = np.random.randint(0, 100, (len(X_test), 784))

X_test_mod = X_test + noise

y_train_mod = X_train

y_test_mod = X_test

knn_clf.fit(X_train_mod, y_train_mod)

clean_digit = knn_clf.predict([X_test_mod[0]])
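The denoising setup can be mimicked at toy scale to show why a neighbor-based model can output a whole image: it returns the clean target stored with the closest noisy training example. The sketch below is a deliberate simplification (a single nearest neighbor in plain Python, whereas `KNeighborsClassifier` uses 5 neighbors by default), and the 4-pixel "images" and function names are hypothetical:

```python
import random


def nearest_neighbor_predict(X_noisy_train, Y_clean_train, x_noisy):
    """Return the clean target of the training sample closest to x_noisy."""
    def squared_distance(a, b):
        return sum((ai - bi) ** 2 for ai, bi in zip(a, b))

    best = min(
        range(len(X_noisy_train)),
        key=lambda i: squared_distance(X_noisy_train[i], x_noisy),
    )
    return Y_clean_train[best]


rng = random.Random(42)


def add_noise(image):
    """Add integer noise in [0, 99] to every pixel, as in the snippet above."""
    return [pixel + rng.randint(0, 99) for pixel in image]


# Hypothetical 4-pixel "images"; the targets are the clean pixel vectors.
clean_images = [[0, 0, 255, 255], [255, 255, 0, 0], [0, 255, 0, 255]]
noisy_train = [add_noise(image) for image in clean_images]
noisy_test = add_noise(clean_images[1])

restored = nearest_neighbor_predict(noisy_train, clean_images, noisy_test)
print(restored)  # [255, 255, 0, 0]
```

Every output pixel is its own label with many possible values, which is what makes the task multioutput rather than just multilabel.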